Machine Learning 1.0.

In today’s post we are going to talk about one of the branches of study that is gaining more and more popularity within computer science: Machine Learning.

Many of the services that we use in our day to day such as Google, Youtube, Netflix, Spotify or Amazon use the tools that Machine Learning offers them to achieve an increasingly personalized service and thus achieve competitive advantages over their rivals.

In Machine Learning, computers apply statistical learning techniques to automatically identify patterns in data. These techniques can be used to make predictions with high precision.

The first process that Machine Learning involves is data exploration. At this stage, statistical measurements and data visualization are usually very useful, for example, 2 and 3-dimensional graphs to have a visual idea of how our data behaves. At this point we can detect outliers that we must discard; or find the characteristics that have the most influence on making a prediction. It is important to carry out a pre-analysis to correct the cases of missing values and try to find a pattern in them, making use of human skills, which facilitates the construction of the model.

Why is it important to explore / visualize the data?

Having a lot of data generates good generalizations, which leads to good results even with a simple algorithm. But there are certain dangers or warnings to be aware of:

- Sometimes having more data leads us to have worse results.

- The quality and variety of the data is important.

- A learning algorithm with adequate performance is always necessary.

- The most important thing is to extract the useful characteristics of the data.

- There is no Machine Learning algorithm that reads the raw, unexplored, and processed data and returns the best model.

Attribute selection is the process by which we select a subset of attributes (represented by each of the columns in a dataset in tabular form) that are most relevant for the construction of the predictive model we are working on. Dimensions in a data set are often called characteristics, predictors, attributes, or variables. Data visualizations help us in making this selection. Here are some of the most used visualizations:

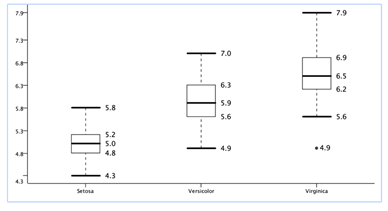

Box Plots: They show the distribution of a single dimension partitioned by the target class.

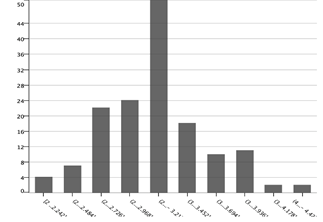

Histogram: Shows the diffusion of a single feature. Put the values in boxes and show the frequency. The number of boxes depends on the breadth you are interested in taking.

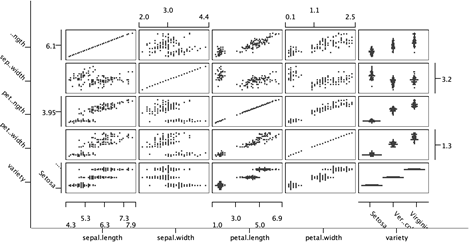

Scatter matrix: Shows multiple relationships on a graph. It allows you to visualize the relationships between each pair of dimensions since adding another dimension allows you to see more nuances.

When looking for patterns in data is where machine learning comes into play. Through visualizations we can see patterns in the data, but the limits to delineate them are not so obvious. The methods employed by machine learning use statistical learning to identify these limits. There are three types of learning:

Supervised learning

In supervised learning, we are given a set of data and we already know what our correct output should be, having the idea that there is a relationship between input and output.

Supervised learning problems are classified into “regression” and “classification” problems. In a regression problem, we are trying to predict the results within a continuous output, which means that we are trying to assign input variables to some continuous function. In a classification problem, we are instead trying to predict the results on a discrete output. In other words, we are trying to assign input variables into discrete categories.

Unsupervised Learning

Unsupervised learning allows us to approach problems with little or no idea of what our results should look like. We can derive the data structure, where we do not necessarily know the effect of the variables, by grouping the data based on relationships between the variables.

With unsupervised learning there is no feedback based on the prediction results. The idea is that the algorithm can find patterns by itself that help to understand the data set.

Reinforcement Learning

In reinforcement learning problems, the algorithm learns by observing the world around it. Your input information is the feedback you get from the outside world in response to your actions. Therefore, the system learns by trial and error.

The process of creating a model is also known as training a model. This is where we really start to use Machine Learning techniques. At this stage we nourish the learning algorithm (s) with the data that we have been processing in the previous stages. The idea is that the algorithms can extract useful information from the data that we pass to it and then be able to make predictions.

The algorithms that are most often used in Machine Learning problems are the following:

- Linear regression

- Logistic regression

- Decision Trees

- Random Forest

- SVM or Support Vector Machines.

- KNN or K nearest neighbors.

- K-means

All of them can be applied to almost any data problem and are all implemented by the excellent Python library, Scikit-learn.

The next step would be to evaluate the algorithm. In this stage we test the information or knowledge that the algorithm obtained from the training of the previous step. We evaluate how accurate the algorithm is in its predictions and if we are not very satisfied with its performance, we can go back to the previous stage and continue training the algorithm by changing some parameters until we achieve acceptable performance.

And here the post today, if you want to continue learning Machine Learning, don’t miss my next posts.

If you want us to help your business or company, contact us at info@aleson-itc.com or call us at +34 962 681 242.

Business Intelligence Senior Consultant.