Discovering Microsoft Fabric

Welcome to a new article on the best Cloud and Data blog. In today’s post, we discuss one of the hottest topics of recent weeks: Microsoft Fabric.

What is Microsoft Fabric?

Fabric is a comprehensive set of tools that enables customers to store, manage, and analyze the data driving their most critical applications.

It also integrates products that meet the needs of all data users in a company, from engineers dealing with the technical aspects of data processing to analysts who want to gain insights and make decisions from data. With Fabric, Microsoft has taken its offering to a new level of integration and ease of use.

Why is Microsoft Fabric different?

Fabric is Microsoft’s way of simplifying and unifying its data architecture around a single data lake, called OneLake, that can store all kinds of data from different sources and applications and make it accessible.

This approach offers significant benefits to customers in terms of cost savings, transparency, flexibility, governance and data quality.

OneLake is designed to be the central repository not only for data generated by Microsoft’s own software services but also for data from external sources, such as third-party applications. It also gives users a consistent experience and interface, regardless of the type or format of the data.

Over the years, tech companies have purchased or developed dozens of software tools for various data and analytics tasks, including business intelligence, data science, machine learning, and real-time streaming. But, to a large extent, they have simply pieced these tools together without creating a cohesive, seamless platform.

As a result, customers have to deal with a complex, fragmented landscape of tools and databases, each with its own provisioning, pricing, and separate pool of data. This is inefficient for customers, who have to spend more time and money managing their data infrastructure.

It also imposes an integration tax on customers, who are charged separately for the compute and storage resources of each service.

Microsoft Fabric promises to remove this complexity by offering a single copy of data, a single experience, and a single interface.

How does Microsoft achieve this simplicity and unification with OneLake?

The key is that OneLake stores a single copy of all the data from the various Microsoft services in a common format, Apache Parquet: an open-source, columnar file format widely used in the industry.

Storing data by column makes it faster to query and analyze. Whenever customers add or update data in their systems, Fabric automatically saves it to OneLake in Parquet format, regardless of its original format. As a result, data can be accessed and queried directly from OneLake, without going through multiple sources or services.
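To make “organizes data by columns” concrete, here is a toy Python sketch, assuming a tiny in-memory orders table with hypothetical column names. It does not implement Parquet (which also adds compression, encodings, and file-level metadata); it only shows why a query that needs one column touches less data when values are stored column by column.

```python
# Toy comparison of row-oriented vs column-oriented layouts.
# This is NOT the Parquet format; it only illustrates why storing data
# by column lets a query read fewer values. Column names are hypothetical.

rows = [
    {"order_id": 1, "region": "EU", "amount": 120.0},
    {"order_id": 2, "region": "US", "amount": 80.0},
    {"order_id": 3, "region": "EU", "amount": 45.5},
]

def to_columns(records):
    """Pivot a list of row dicts into a dict of column lists."""
    columns = {}
    for record in records:
        for name, value in record.items():
            columns.setdefault(name, []).append(value)
    return columns

def total_amount_row_store(records):
    """Row layout: every full record is visited to read one field."""
    values_read = 0
    total = 0.0
    for record in records:
        values_read += len(record)  # the whole row is read
        total += record["amount"]
    return total, values_read

def total_amount_column_store(columns):
    """Column layout: only the 'amount' column is read."""
    amounts = columns["amount"]
    return sum(amounts), len(amounts)

columns = to_columns(rows)
print(total_amount_row_store(rows))        # (245.5, 9)
print(total_amount_column_store(columns))  # (245.5, 3)
```

Both layouts return the same total, but the columnar version reads 3 values instead of 9; on wide tables with millions of rows, that difference is what makes analytical queries faster.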

For example, if a customer wants to use the Microsoft Power BI business intelligence tool to analyze data from the Microsoft Synapse Data Warehouse, Power BI does not have to send a query to Synapse; it simply reads the data from OneLake. This reduces the number of queries across services and lowers costs for customers, who are charged for a single copy of storage and a single data cube instead of several.

Simplifying Data Sources

OneLake’s simplicity and unification also extend to data coming from outside the Microsoft ecosystem. OneLake stores its tables in Delta Lake, an open-source table format that adds a single metadata layer (a transaction log) on top of Parquet files, so that data from many sources ends up in a common format that any compatible compute engine can read.
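Delta Lake records changes in a transaction log of JSON commit files (kept in a `_delta_log` folder next to the Parquet data files), and readers rebuild the current state of a table by replaying that log. The sketch below is a simplified Python model of that idea, assuming only two action types, `add` and `remove`, and hypothetical file names; the real protocol also defines metadata, protocol, and commit-info actions, plus checkpoints.

```python
import json

# Each commit is a JSON string holding a list of actions, loosely modeled
# on Delta Lake's "add"/"remove" actions. File names are hypothetical.
commits = [
    json.dumps([{"add": {"path": "part-000.parquet"}},
                {"add": {"path": "part-001.parquet"}}]),
    # An update rewrites part-001 into part-002: old file removed, new file added.
    json.dumps([{"remove": {"path": "part-001.parquet"}},
                {"add": {"path": "part-002.parquet"}}]),
]

def snapshot(log, version=None):
    """Replay commits 0..version and return the set of live data files."""
    live = set()
    last = len(log) - 1 if version is None else version
    for entry in log[: last + 1]:
        for action in json.loads(entry):
            if "add" in action:
                live.add(action["add"]["path"])
            elif "remove" in action:
                live.discard(action["remove"]["path"])
    return live

print(sorted(snapshot(commits)))             # ['part-000.parquet', 'part-002.parquet']
print(sorted(snapshot(commits, version=0)))  # ['part-000.parquet', 'part-001.parquet']
```

Because the Parquet files themselves are never modified, replaying the log only up to an earlier version returns the older set of files, which is the idea behind Delta Lake’s time travel.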

Microsoft makes it easy for customers to bring in and transform data from third-party services with Data Factory, which offers more than 150 pre-built connectors.

Microsoft is also working on ways to automate the transformation process, instead of relying on the traditional and slow extract, transform, and load (ETL) method.

Microsoft Fabric can also support multi-cloud scenarios. With a feature called Shortcuts, OneLake can virtualize data stored in Amazon S3 and in Google Cloud Storage (coming soon), without copying it.
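Conceptually, a shortcut is a pointer: the lake records where the data lives and resolves reads against that external location instead of copying the data. The Python sketch below models that idea under stated assumptions; the `Lake` and `ExternalStore` classes, the paths, and the in-memory “bucket” are all hypothetical and are not the OneLake API.

```python
from dataclasses import dataclass, field

# Hypothetical model of a lake namespace in which a "shortcut" is a pointer
# to an external location. Class names, paths, and keys are invented for
# illustration; this is not the OneLake API.

@dataclass
class ExternalStore:
    """Stands in for a remote object store such as an S3 bucket."""
    objects: dict

    def read(self, key):
        return self.objects[key]

@dataclass
class Lake:
    local_files: dict = field(default_factory=dict)
    shortcuts: dict = field(default_factory=dict)  # prefix -> (store, remote prefix)

    def add_shortcut(self, prefix, store, remote_prefix):
        # Only a reference is recorded; no data is copied into the lake.
        self.shortcuts[prefix] = (store, remote_prefix)

    def read(self, path):
        for prefix, (store, remote_prefix) in self.shortcuts.items():
            if path.startswith(prefix):
                # Resolve the logical path to the remote key at read time.
                return store.read(remote_prefix + path[len(prefix):])
        return self.local_files[path]

s3 = ExternalStore({"sales/2023/jan.csv": "id,amount\n1,120\n"})
lake = Lake(local_files={"Files/local.csv": "id\n7\n"})
lake.add_shortcut("Files/s3_sales/", s3, "sales/")

print(lake.read("Files/s3_sales/2023/jan.csv"))  # served from the external store
print(lake.read("Files/local.csv"))              # served from the lake itself
```

Readers use one path scheme for both local and external data, while the bytes stay where they already are.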

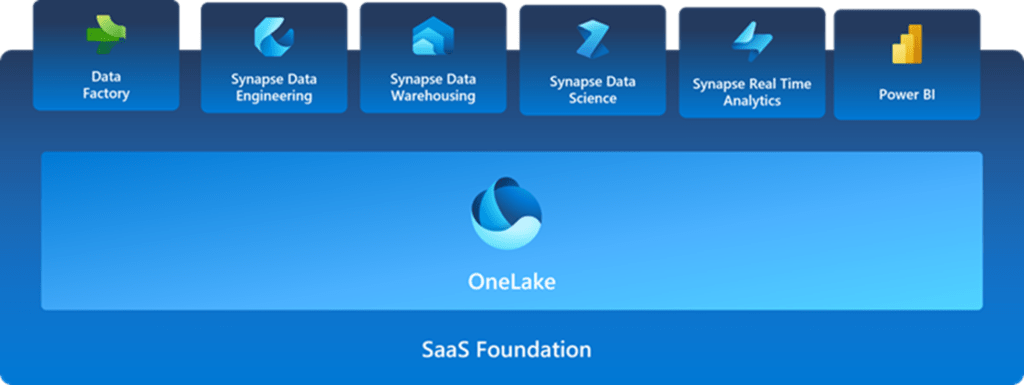

In 2019, Microsoft launched Azure Synapse Analytics, which combined various services, such as data lake and data warehouse capabilities, into a single hub. But Fabric is the ultimate integration, bringing Synapse, Power BI, and other data services together as a single software-as-a-service (SaaS) offering.

This means data engineers don’t have to provision compute units, which simplifies their job. By connecting data sources, Fabric improves data consistency and reliability, and by providing a single place to go, it offers a single window onto data management for security, governance, integration, and discovery.

If customers want to apply security rules to their data, they can largely do so at the OneLake level, and every Fabric application that accesses the data has to follow those rules. The files keep the same rules, and even the same encryption, when they are sent outside Microsoft Fabric.
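The benefit of defining rules once at the storage layer is that every engine passes through the same enforcement point. The toy Python model below illustrates only that pattern; `Storage`, `SqlEngine`, `ReportEngine`, and the table and user names are hypothetical, and this is not how Fabric implements OneLake security.

```python
# Toy model: access rules live in the storage layer, and every engine reads
# through it, so a rule defined once applies everywhere. Names are hypothetical.

class Storage:
    def __init__(self):
        self.tables = {}
        self.allowed = {}  # table name -> set of users allowed to read it

    def grant(self, table, user):
        self.allowed.setdefault(table, set()).add(user)

    def read(self, user, table):
        # Single enforcement point shared by every engine.
        if user not in self.allowed.get(table, set()):
            raise PermissionError(f"{user} may not read {table}")
        return self.tables[table]

class SqlEngine:
    def __init__(self, storage):
        self.storage = storage

    def count_rows(self, user, table):
        return len(self.storage.read(user, table))

class ReportEngine:
    def __init__(self, storage):
        self.storage = storage

    def total(self, user, table, column):
        return sum(row[column] for row in self.storage.read(user, table))

storage = Storage()
storage.tables["sales"] = [{"amount": 10}, {"amount": 32}]
storage.grant("sales", "ana")

print(SqlEngine(storage).count_rows("ana", "sales"))         # 2
print(ReportEngine(storage).total("ana", "sales", "amount"))  # 42
try:
    ReportEngine(storage).total("bob", "sales", "amount")
except PermissionError as err:
    print(err)  # bob may not read sales
```

Neither engine contains any security logic of its own, so adding a third engine does not require re-implementing the rules.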

What about the Lakehouse?

One of the areas in which Microsoft has lagged behind some of its competitors is the so-called lakehouse, which combines two technologies: a data lake to store a company’s data and a data warehouse to analyze it.

This technology has become popular due to the rise of applications such as artificial intelligence, which require large amounts of data and analysis. Databricks has been a pioneer in this space.

Microsoft has partnered with Databricks, offering it on Azure. Fabric closes the gap with Databricks and aims to surpass it, extending the open format pioneered by Databricks (Delta Lake) to the rest of Microsoft’s more comprehensive data stack.

Microsoft’s unified experience and its move to SaaS could help Synapse, now part of Fabric, make the final leap. Databricks, by contrast, is still a PaaS offering, which means data engineers have to do more work, such as specifying the number of nodes on which to run processing jobs.

Microsoft Fabric combines Microsoft’s strength in business intelligence (Power BI) with data science, and adds other capabilities such as pattern detection and workflows. Microsoft is also trying to build a bridge between BI and AI.

That’s all for today’s post. If you liked it, you may also be interested in our latest articles:

How to Configure pgbackrest in PostgreSQL to back up to Azure

Incremental Refresh & Real Time with Direct Query in Power BI

4 Essential Cybersecurity settings in Azure AD to avoid a Cyberattack

If you are working on a data analytics project, Aleson ITC can help you.

Marketing and Communications Specialist. International Trade, Business Management, SEO, PPC.